NLTK TEXT CLEANER HOW TO

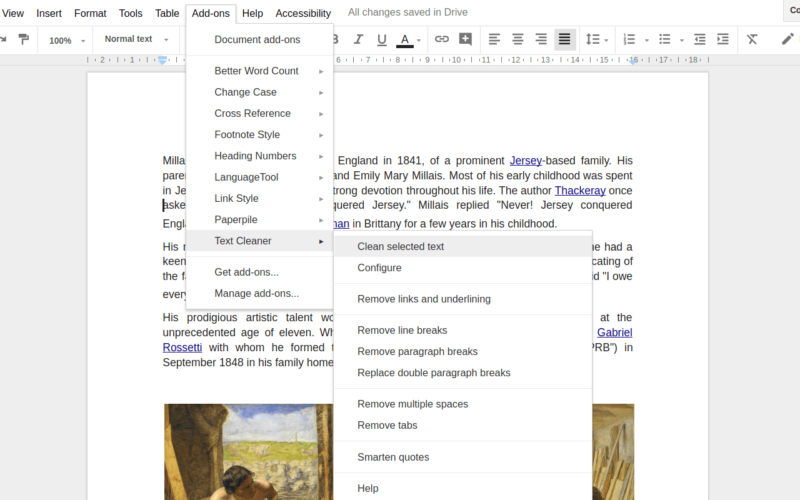

The first item in each tuple is the value to search for, and the second is the value to fill in for the matched value. The pattern list, `store_patterns_2`, is therefore just a list of (search text, normalized name) pairs. A useful benefit of this solution is that it is much easier to maintain this list than one in which every entry repeats a full `str.contains` condition. Another feature of the function is that the original value is preserved when there is no match and no default is given, so no separate step is needed to fill in the unmatched rows; the function takes care of it all. Finally, I turned off regex matching by default (`regex=False`); pass `regex=True` when the search text is a regular expression. Now that the data is all set up, calling it is simple: pass the store-name series and `store_patterns_2` to `generalize`.

A newsletter by Randy Au offers a good discussion of the importance of data cleaning and the love-hate relationship many data scientists have with this task. In my experience, you can learn a lot about your underlying data by taking on the kind of cleaning activities outlined in this article. I suspect you will find lots of cases in your day-to-day analysis where you need to do text cleaning similar to what I've shown in this article.

Here is a quick summary of the solutions we looked at:

Text Cleaning Options
- Categorical data can get tricky when merging and joining.
- A lookup table can be maintained by someone else.

For some data sets, performance is not an issue, so pick what clicks with your brain. However, as the data grows in size (imagine doing this analysis for 50 states' worth of data), you will need to understand how to use pandas efficiently for text cleaning.
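The summary notes mention a lookup table that someone else can maintain, and categorical data. The code for those options is not preserved here, so the following is only a minimal sketch, assuming the lookup table is a two-column CSV of raw store names and their groups. The CSV contents, the `Store_Name`/`Store_Group` column names, and the sample rows are all hypothetical. Raw names missing from the table keep their original text, and the result is converted to the `category` dtype.

```python
import io

import pandas as pd

# Hypothetical lookup table; in practice this could be a CSV maintained by someone else
lookup_csv = io.StringIO(
    "Store_Name,Store_Group\n"
    "Hy-Vee #3 / BDI / Des Moines,Hy-Vee\n"
    "Hy-Vee Food Store / Urbandale,Hy-Vee\n"
    "Casey's General Store #2521 / Ames,Casey's General Store\n"
)
lookup = pd.read_csv(lookup_csv)

# Made-up sales rows with raw store names
sales = pd.DataFrame({
    "Store_Name": [
        "Hy-Vee #3 / BDI / Des Moines",
        "Casey's General Store #2521 / Ames",
        "Hy-Vee Food Store / Urbandale",
        "Downtown Wine Shop",
    ]
})

# Exact-match lookup: map raw names through a Series indexed by Store_Name
group_map = lookup.set_index("Store_Name")["Store_Group"]
groups = sales["Store_Name"].map(group_map)

# Names not found in the lookup table keep their original text
sales["Store_Group"] = groups.fillna(sales["Store_Name"])

# Store the repeated group labels as a categorical to save memory
sales["Store_Group"] = sales["Store_Group"].astype("category")
print(sales["Store_Group"].tolist())
# ['Hy-Vee', "Casey's General Store", 'Hy-Vee', 'Downtown Wine Shop']
```

Because `map` only matches exact raw names, every new store spelling needs its own row in the table; that is the trade-off for letting someone else maintain it. The categorical conversion is where the caution about merging and joining applies.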

NLTK TEXT CLEANER SERIES

The `generalize` function can be called on a pandas series and expects a list of tuples.

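Inside the loop over `match_name`, each search term builds a boolean mask with `ser.str.contains(match, case=case, regex=regex)`. `seen` starts as `None`; the first mask becomes `seen`, and each later mask is combined with `seen |= mask`, so `seen` records every row that has matched any pattern. Matching rows are then replaced with `ser = ser.where(~mask, name)`. After the loop, if a `default` is supplied, `ser = ser.where(seen, default)` fills every row that never matched with that default; otherwise, the original text is kept.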
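The loop described above can be sketched as a self-contained function. This is a reconstruction under assumptions, not the article's exact code: it keeps the documented signature and parameters, but it always searches the original text and only fills rows that no earlier pattern has matched, so the first matching pattern wins instead of a later one overwriting it. It also passes `na=False` so that missing names count as no match. The store names are invented sample data.

```python
import pandas as pd


def generalize(ser, match_name, default=None, regex=False, case=False):
    """Search a series for text matches and replace them with normalized names.

    ser: pandas Series to search
    match_name: list of (text to search for, normalized name) tuples
    default: value for rows with no match; if None, keep the original text
    regex: treat the search text as a regular expression
    case: case-sensitive search
    """
    result = ser.copy()
    # Tracks which rows have matched any pattern so far
    seen = pd.Series(False, index=ser.index)
    for match, name in match_name:
        # Always search the original text; missing values count as no match
        mask = ser.str.contains(match, case=case, regex=regex, na=False)
        # Only fill rows that no earlier pattern has claimed (first match wins)
        result = result.mask(mask & ~seen, name)
        seen |= mask
    if default is not None:
        # Rows that never matched get the default value
        result = result.where(seen, default)
    return result


# Invented sample store names
stores = pd.Series([
    "Hy-Vee #3 / Des Moines",
    "CASEY'S GENERAL STORE #2521",
    "Kum & Go #121 / Ames",
    "Main Street Liquor",
])
store_patterns_2 = [
    ("Hy-Vee", "Hy-Vee"),
    ("Casey's", "Casey's General Store"),
    ("Kum & Go", "Kum & Go"),
]

print(generalize(stores, store_patterns_2).tolist())
# ['Hy-Vee', "Casey's General Store", 'Kum & Go', 'Main Street Liquor']
print(generalize(stores, store_patterns_2, default="Other").tolist())
# ['Hy-Vee', "Casey's General Store", 'Kum & Go', 'Other']
```

A pattern such as `'Walmart|Wal-Mart'` only works with `regex=True`; with the default `regex=False` the pipe is searched for literally.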

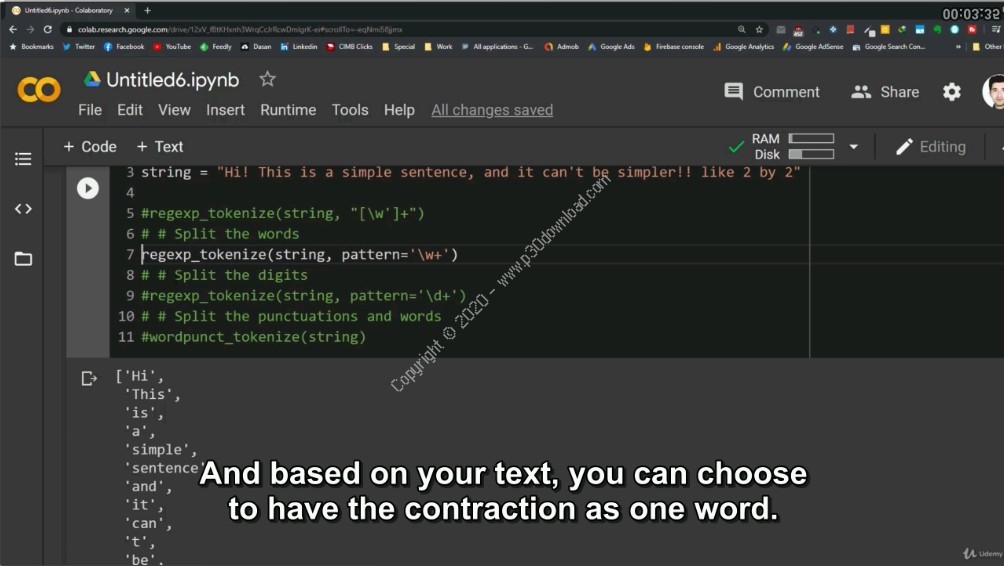

NLTK TEXT CLEANER CODE

Let's get started by importing our modules and reading the data. It's not required for the cleaning, but I wanted to highlight how useful it can be for these data exploration scenarios.

The store patterns pair each condition with the value it should produce. Every condition is a case-insensitive `str.contains` check on the store-name column with `regex=False`, except the Walmart entry, which uses the regular expression `'Walmart|Wal-Mart'`. The pairs are:

- `Hy-Vee` → `Hy-Vee`
- `Central City` → `Central City`
- `Smokin' Joe's` → `Smokin' Joe's`
- `Walmart|Wal-Mart` (regular expression) → `Wal-Mart`
- `Fareway Stores` → `Fareway Stores`
- `Casey's` → `Casey's General Store`
- `Sam's Club` → `Sam's Club`
- `Kum & Go` → `Kum & Go`
- `CVS` → `CVS Pharmacy`
- `Walgreens` → `Walgreens`
- `Yesway` → `Yesway Store`
- `Target Store` → `Target`
- `Quik Trip` → `Quik Trip`
- `Circle K` → `Circle K`
- `Hometown Foods` → `Hometown Foods`
- `Bucky's` → `Bucky's Express`
- `Kwik` → `Kwik Shop`

One of the big challenges when working with separate lists of conditions and values is that it is easy to get them mismatched. I've decided to combine each condition and its value into a tuple to more easily keep them paired. Because of this data structure, we need to break the list of tuples into two separate lists before using it.

A more maintainable option is a `generalize(ser, match_name, default=None, regex=False, case=False)` function that searches a series for text matches. It is based on code from …; its docstring describes the parameters:

- `ser`: pandas series to search
- `match_name`: tuples containing the text to search for and the text to use for normalization
- `default`: if there is no match, use this to provide a default value; otherwise, use the original text
- `regex`: Boolean to indicate whether `match_name` contains a regular expression
- `case`: case-sensitive search

It returns a pandas series with the matched value. The body starts with `seen = None` and then loops over each `match, name` pair in `match_name`.
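The tuple-splitting step can be sketched as follows. The text does not name the function that consumes the two lists; this sketch assumes NumPy's `np.select`, which takes a list of boolean conditions and a matching list of values and, for each row, picks the value of the first condition that is true. The `Store_Name` column, the sample rows, and the `'Other'` default are hypothetical, and only four of the store patterns are included.

```python
import numpy as np
import pandas as pd

# Hypothetical sample data; the real data set's column names are not shown here
df = pd.DataFrame({"Store_Name": [
    "Hy-Vee Food Store #1 / Urbandale",
    "Central City 2",
    "Wal-Mart 1546 / Hiawatha",
    "Fareway Stores #989 / Ankeny",
    "Downtown Wine Shop",
]})
names = df["Store_Name"]

# Each tuple keeps a condition next to the value it should produce
store_patterns = [
    (names.str.contains("Hy-Vee", case=False, regex=False), "Hy-Vee"),
    (names.str.contains("Central City", case=False, regex=False), "Central City"),
    (names.str.contains("Walmart|Wal-Mart", case=False), "Wal-Mart"),
    (names.str.contains("Fareway Stores", case=False, regex=False), "Fareway Stores"),
]

# Break the list of tuples into two parallel tuples: conditions and values
store_criteria, store_values = zip(*store_patterns)

# np.select uses the value of the first true condition for each row
df["Store_Group"] = np.select(list(store_criteria), list(store_values), default="Other")
print(df["Store_Group"].tolist())
# ['Hy-Vee', 'Central City', 'Wal-Mart', 'Fareway Stores', 'Other']
```

Since each condition lives in the same tuple as its value, adding or removing a store touches only one entry, and `zip(*store_patterns)` keeps the two resulting lists aligned.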

NLTK TEXT CLEANER DOWNLOAD

For the sake of this article, let's say you have a brand new craft whiskey that you would like to sell. Your territory includes Iowa, and there just happens to be an open data set that shows all of the liquor sales in the state. This is an opportunity for you to use your analysis skills to see who the biggest accounts are in the state. With that data, you can plan your sales process for each of the accounts.

Excited about the opportunity, you download the data and realize it's pretty large. The data set for this case is a 565MB CSV file with 24 columns and 2.3M rows. This is not big data by any means, but it is big enough to make Excel crawl, and big enough that some of the pandas approaches will be relatively slow on your laptop.

For this article, I'll be using data that includes all of 2019 sales. You can download it from the state site for a different time period. Due to the size, you …
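Finding the biggest accounts comes down to totaling sales per store and sorting. Here is a minimal sketch with a few made-up rows read from an in-memory CSV; the `Date`, `Store_Name`, and `Sale_Dollars` column names are assumptions, not taken from the actual data set. On the full 565MB file, `usecols` keeps pandas from parsing columns the analysis does not need.

```python
import io

import pandas as pd

# A few made-up rows standing in for the large CSV (column names are hypothetical)
csv_data = io.StringIO(
    "Date,Store_Name,Sale_Dollars\n"
    "01/02/2019,Hy-Vee #3 / BDI / Des Moines,1200.50\n"
    "01/02/2019,Central City 2,310.00\n"
    "01/03/2019,Hy-Vee #3 / BDI / Des Moines,800.00\n"
    "01/03/2019,Kum & Go #121 / Ames,95.25\n"
)

# Only parse the columns needed for this question
df = pd.read_csv(csv_data, usecols=["Store_Name", "Sale_Dollars"])

# Total sales per store, largest first
top_accounts = (
    df.groupby("Store_Name")["Sale_Dollars"]
    .sum()
    .sort_values(ascending=False)
)
print(top_accounts.head(10))
```

Grouping on raw names treats every location and every spelling as a separate account, which is one reason to normalize the store names before ranking accounts.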
